[doc/results] Add missing sources

- .gitignore: 2 additions, 2 deletions
- doc/results/baselines/chasedb1.pdf: 0 additions, 0 deletions
- doc/results/baselines/chasedb1.png: 0 additions, 0 deletions
- doc/results/baselines/drive.pdf: 0 additions, 0 deletions
- doc/results/baselines/drive.png: 0 additions, 0 deletions
- doc/results/baselines/hrf.pdf: 0 additions, 0 deletions
- doc/results/baselines/hrf.png: 0 additions, 0 deletions
- doc/results/baselines/iostar-vessel.pdf: 0 additions, 0 deletions
- doc/results/baselines/iostar-vessel.png: 0 additions, 0 deletions
- doc/results/baselines/stare.pdf: 0 additions, 0 deletions
- doc/results/baselines/stare.png: 0 additions, 0 deletions
- doc/results/xtest/index.rst: 90 additions, 0 deletions

doc/results/baselines/chasedb1.pdf

0 → 100644

File added

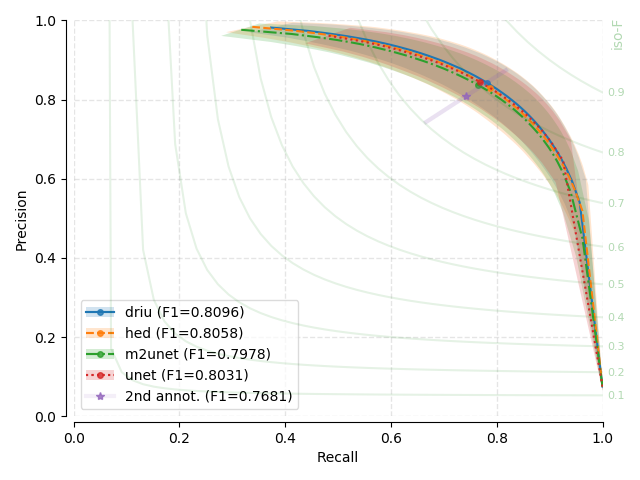

doc/results/baselines/chasedb1.png

0 → 100644

91.6 KiB

doc/results/baselines/drive.pdf

0 → 100644

File added

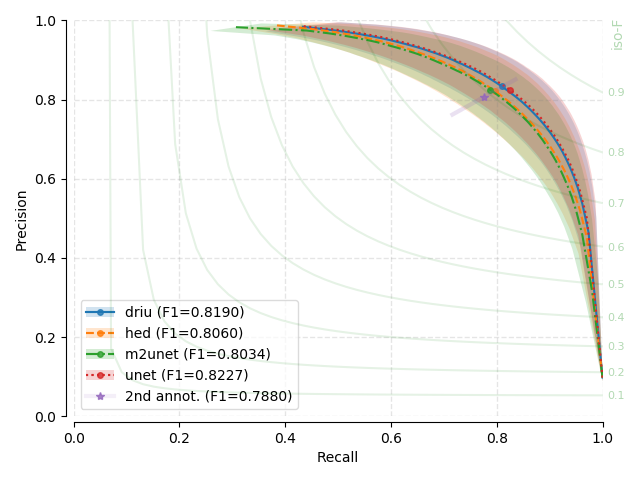

doc/results/baselines/drive.png

0 → 100644

93.5 KiB

doc/results/baselines/hrf.pdf

0 → 100644

File added

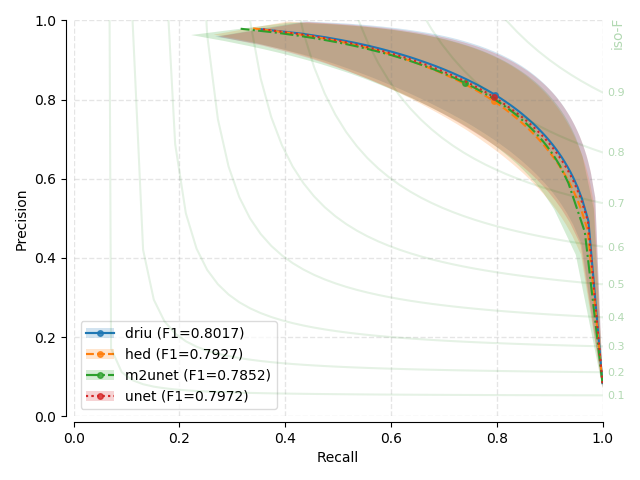

doc/results/baselines/hrf.png

0 → 100644

85.7 KiB

doc/results/baselines/iostar-vessel.pdf

0 → 100644

File added

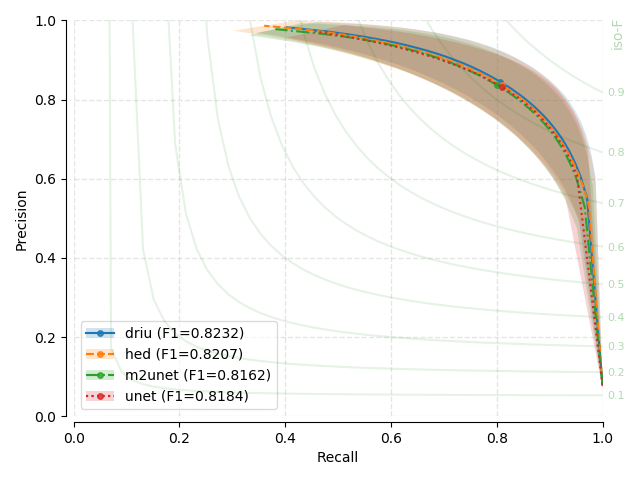

doc/results/baselines/iostar-vessel.png

0 → 100644

85.2 KiB

doc/results/baselines/stare.pdf

0 → 100644

File added

doc/results/baselines/stare.png

0 → 100644

92.4 KiB

doc/results/xtest/index.rst

0 → 100644